- Richard Seymour

The Twittering Machine Page 2

Writing is not all we are doing. Much of the time is spent consuming video content, for example, or purchasing quirky products. But even here, as we’ll see, the logic of algorithms means that we have often, in a sense, written the content, collectively. This is what ‘big data’ allows: we are writing even when searching, scrolling, hovering, watching and clicking through. In the strange world of algorithm-driven products, videos, images and websites – everything from violent, eroticized, animated fantasies aimed at children on YouTube to ‘Keep Calm and Rape’ t-shirts – unconscious desires recorded in this way are written into the new universe of commodities.13 This is the ‘modern calculating machine’ that Lacan spoke of: a machine ‘far more dangerous than the atom bomb’ because it can defeat any opponent by calculating, with sufficient data, the unconscious axioms that govern a person’s behaviour.14 We write to the machine, it collects and aggregates our desires and fantasies, segments them by market and demographic and sells them back to us as a commodity experience.

And insofar as we are writing more and more, it has become just another part of our screened existence. To talk about social media is to talk about the fact that our social lives are more and more mediated. Online proxies for friendship and affection – ‘likes’, and so on – significantly reduce the stakes of interacting, while also making interactions far more volatile.

V.

The social industry giants like to claim that there is nothing wrong with the tech that can’t be fixed by the tech. No matter what the problem, there’s a tool for that: their equivalent of ‘one weird trick’.

Facebook and Google have invested in tools to detect ‘fake news’, while Reuters has developed its own proprietary algorithm for locating falsehoods. Google has funded a UK start-up, Factmata, to develop tools for automatically checking facts – such as, say, economic growth figures, or the numbers of immigrants arriving in the USA last year. Twitter uses tools created by IBM Watson to target cyberbullying, while a Google project, Conversation AI, promises to detect aggressive users with sophisticated AI technology. And as depression and suicide become more common, Facebook CEO Mark Zuckerberg announced new tools to combat depression, with Zuckerberg even claiming that AI could spot suicidal tendencies in a user before a friend would.

But the social industry giants are increasingly caught out by a growing number of defectors, who have expressed regret over the tools they helped create. Chamath Palihapitiya, a Canadian venture capitalist with philanthropic leanings, is a former Facebook executive with a guilty conscience. Tech capitalists, he says, have ‘created tools that are ripping apart the social fabric of how society works’. He blames the ‘short-term, dopamine-driven feedback loops’ of social industry platforms for promoting ‘misinformation, mistruth’ and giving manipulators access to an invaluable tool.15 It’s so bad, he says, that his children ‘aren’t allowed to use that shit’.

You might be tempted to think that whatever dark side the social industry has is an accidental by-product, like a spandrel. You would be wrong. Sean Parker, the Virginia-born billionaire hacker and inventor of the file-sharing site Napster, was an early investor in Facebook and the company’s first president.16 Now he’s a ‘conscientious objector’. Social media platforms, he explains, rely on a ‘social validation feedback loop’ to ensure that they monopolize as much of the user’s time as possible. This is ‘exactly the kind of thing that a hacker like myself would come up with, because you’re exploiting a vulnerability in human psychology. The inventors, creators . . . understood this consciously. And we did it anyway.’ The social industry has created an addiction machine, not as an accident, but as a logical means to return value to its venture capitalist investors.

It was another former Twitter adviser and Facebook executive, Antonio García Martínez, who explained the potential political ramifications of this.17 García Martínez, the son of Cuban exiles who made his fortune on Wall Street, was a product manager for Facebook. Like Parker and Palihapitiya, he casts an unflattering light on his former employers. He stresses Facebook’s ability to manipulate its users. In May 2017, it emerged, through leaked documents published in The Australian, that Facebook executives were discussing with advertisers how they could use their algorithms to identify and manipulate teenagers’ moods. Stress, anxiety, feelings of failure were all picked up by Facebook’s tools. According to García Martínez, the leaks were not only accurate but had political consequences. With enough data, Facebook could identify a demographic and hammer it with advertising: the ‘click-through rate’ never lies. But it could also, as a running joke in the company acknowledged, easily ‘throw the election’ by simply running a reminder to vote in key areas on election day.

This situation is completely without precedent, and it is now evolving so quickly that we can barely keep track of where we are. And the more technology evolves, the more that new layers of hardware and software are added, the harder it is to change. This is handing tech capitalists a unique source of power. As the Silicon Valley guru Jaron Lanier puts it, they don’t have to persuade us when they can directly manipulate our experience of the world.18 Technologists augment our senses with webcams, smartphones and constantly expanding quantities of digital memory. Because of this, a tiny group of engineers can ‘shape the entire future of human experience with incredible speed’.

We are writing, and as we write, we are being written. More accurately, as a society we are becoming hard-written, so that we cannot press delete without gravely disrupting the system as a whole. But what sort of future are we writing ourselves into?

VI.

In the birthing bloom of the web and instant messaging, we learned that we could all be authors, all published, all with our own public. No one with internet access need be excluded.

And the good news gospel was that this democratisation of writing would be good for democracy. Scripture, text, would save us. We could have a utopia of writing, a new way of life. Almost six hundred years of a stable print culture was ending, and it was going to turn the world upside down.

We would enjoy ‘creative autonomy’, freed from the monopolies of old media and their one-way traffic of meaning.19 We would find new forms of political engagement instead of parties, connected by arborescent online networks. Multitudes would suddenly swarm and descend on the powerful, and then dissipate just as quickly, before they could be sanctioned. Anonymity would allow us to form new identities freed from the limits of our everyday lives, and escape surveillance. There were a host of so-called ‘Twitter revolutions’, misleadingly credited to the ability of educated social industry users to outflank senile dictatorships, and discredit the ‘elderly rubbish’ they spoke.

And then, somehow, this techno-utopianism returned in an inverted form. The benefits of anonymity became the basis for trolling, ritualized sadism, vicious misogyny, racism and alt-right subcultures. Creative autonomy became ‘fake news’ and a new form of infotainment. Multitudes became lynch mobs, often turning on themselves.20 Dictators and other authoritarians learned how to use Twitter and master its seductive language games, as did the so-called Islamic State whose slick online media professionals affect mordant and hyper-aware tones. The United States elected the world’s first ‘Twitter president’. Cyber-idealism became cyber-cynicism.

And the silent behemoth lurking behind all this was the network of global corporations, public-relations firms, political parties, media companies, celebrity avatars and others responsible for most of the traffic and attention. They too, rather like the advanced cyborg in Terminator 2, have managed pitch-perfect emulation of human voices, insouciant, ironic and intimate. Legal persons according to US law, these corporations also have carefully produced personalities: they miss you, they love you, they just want to make you laugh: please come back.

Meanwhile publicity, taken to the level of a new art form for those with the resources to make the most of it, is a poisoned chalice for almost everyone else. If the social industry is an addiction machine, the addictive behaviour it is closest to is gambling: a rigged lottery. Every gambler trusts in a few abstract symbols – the dots on a dice, numerals, suits, red or black, the graphemes on a fruit machine – to tell them who they are. In most cases, the answer is brutal and swift: you are a loser and you are going home with nothing. The true gambler takes a perverse joy in anteing up, putting their whole being at stake. On social media, you scratch out a few words, a few symbols, and press ‘send’, rolling the dice. The internet will tell you who you are, and what your destiny is through arithmetic ‘likes’, ‘shares’ and ‘comments’.

The interesting question is what it is that is so addictive. In principle, anyone can win big; in practice, not everyone is playing with the same odds. Our social industry accounts are set up like enterprises competing for eyeball attention. If we are all authors now, we write, not for money, but for the satisfaction of being read. Going viral, or ‘trending’, is the equivalent of a windfall. But sometimes, ‘winning’ is the worst thing that can happen. The temperate climate of ‘likes’ and approval is apt to break, lightning-quick, into sudden storms of fury and disapproval. And if ordinary users are ill-equipped to make the best of ‘going viral’, they also have few resources to weather the storms of negative publicity, which can include anything from doxing – maliciously publishing private information – to ‘revenge porn’. We may be treated as if we are micro-enterprises, but we are not corporations with public-relations budgets or social industry managers. Even wealthy celebrities can find themselves permanently damaged by tabloid attacks – so how is someone tweeting on the train, and during toilet breaks at work, supposed to cope with the internet’s devolved form of tabloid scandal and bottom-feeding culture?

A 2015 study looked into the reasons why people who try to quit the social industry fail.21 The survey data came from a group of people who had signed up to quit Facebook for just ninety-nine days. Many of these determined quitters couldn’t even make the first few days. And many of those who successfully quit had access to another social networking site, like Twitter, so that they had simply displaced their addiction. Those who stayed away, however, were typically in a happier frame of mind, and less interested in controlling how other people thought of them, thus implying that social media addiction is partly a self-medication for depression and partly a way of curating a better self in the eyes of others. Indeed, these two factors may not be unrelated.

For those who are curating a self, social media notifications work as a form of clickbait.22 Notifications light up the ‘reward centres’ of the brain, so that we feel bad if the metrics we accumulate on our different platforms don’t express enough approval. The addictive aspect of this is similar to the effect of poker machines or smartphone games, recalling what cultural theorist Byung-Chul Han calls the ‘gamification of capitalism’.23 But it is not only addictive. Whatever we write has to be calibrated for social approval. Not only do we aim for conformity among our peers but, to an extent, we only pay attention to what our peers write insofar as it allows us to write something in reply, for the ‘likes’. Perhaps this is what, among other things, gives rise to what is often derided as ‘virtue-signalling’, not to mention the ferocious rows, overreactions, wounded amour propre and grandstanding that often characterize social industry communities.

Yet, we are not Skinner’s rats. Even Skinner’s rats were not Skinner’s rats:24 the patterns of addictive behaviour displayed by rats in the ‘Skinner Box’ were only displayed by rats in isolation, outside of their normal sociable habitat. For human beings, addictions have subjective meaning, as does depression. Marcus Gilroy-Ware’s study of social media suggests that what we encounter in our feeds is hedonic stimulation, various moods and sources of arousal – from outrage porn to food porn to porn – which enable us to manage our emotions.25 In addition to that, however, it’s also true that we can become attached to the miseries of online life, a state of perpetual outrage and antagonism. There is a sense in which our online avatar resembles a ‘virtual tooth’ in the sense described by the German surrealist artist Hans Bellmer.26 In the grip of a toothache, a common reflex is to make a fist so tight that the fingernails bite into the skin. This ‘confuses’ and ‘bisects’ the pain by creating a ‘virtual centre of excitation’, a virtual tooth that seems to draw blood and nervous energy away from the real centre of pain.

If we are in pain, this suggests, self-harming can be a way of displacing it so that it appears lessened – even though the pain hasn’t really been reduced, and we still have a toothache. So if we get hooked on a machine that purports to tell us, among other things, how other people see us – or a version of ourselves, a delegated online image – that suggests something has already gone wrong in our relationships with others. The global rise in depression – currently the world’s most widespread illness, having risen some 18 per cent since 2005 – is worsened for many people by the social industry.27 There is a particularly strong correlation between depression and the use of Instagram among young people. But social industry platforms didn’t invent depression; they exploited it. And to loosen their grip, one would have to explore what has gone wrong elsewhere.

VII.

If the social industry is an attention economy, its payoffs distributed in the manner of a casino, winning can be the worst thing that happens to someone. As many users have found to their cost, not all publicity is good publicity.

In 2013, a forty-eight-year-old bricklayer from Hull in the north of England was found hanging, dead, in a cemetery. Steven Rudderham had been targeted by an anonymous group of vigilantes on Facebook who had decided that he was a paedophile.28 For no good reason, someone had copied his profile image and made a banner with it, accusing him of being a ‘dirty perv’. It took fifteen minutes for it to be shared hundreds of times; and three days of hate mail, and death and castration threats, for Rudderham to kill himself.

Only a few days previously, it emerged, Chad Lesko of Toledo, Ohio had been repeatedly assaulted by police and abused by local residents because they thought he was wanted for the rape of three girls and his young son.29 The false accusation came from a dummy account set up by his ex-girlfriend. Ironically, Lesko had himself been abused by his father. Such mobbing, increasingly common on the social industry, is not always the result of conscious malice. Garnet Ford of Vancouver, and Triz Jefferies of Philadelphia, were both witch-hunted by social media because they were confused with wanted criminals.30 Ford lost his job and Jefferies was hounded by a mob at his home.

These examples may be extreme, but they touch on a number of well-known problems exacerbated by the medium, from ‘fake news’, to trolling and bullying, to depression and suicide. And they raise fundamental questions about how the social industry platforms work. Why, for example, were so many people disposed to believe the ‘fake news’, as it were? Why was no one able to stop the crowd in their tracks and point out the vindictive lunacy of their actions? What sort of satisfaction did the participants expect to get out of it other than the schadenfreude of watching someone go down, even to their death?

While the social industry is perceived as, and can be, a great leveller, it can also simply invert the usual hierarchies of authority and factual sourcing. Those who joined lynch mobs had nothing to authorize the beliefs they acted on other than someone’s say so. The more anonymous the accusations were, the more effective they were. Anonymity detaches the accusation from the accuser and any circumstances, contexts, personal histories or relationships that might give anyone a chance to evaluate or investigate it. It allows the logic of collective outrage to take over. It no longer matters, beyond a certain point, whether the individual participants are ‘really’ outraged. The accusation is outraged on their behalf. It has a life of its own: a rolling, aimless, omnidirectional wrecking ball; a voice, seemingly, without a body; a harassment without a harasser; a virtual Witchfinder General. Standards of veracity are not only inverted, but detached from the traditional notion of the person as the source of testimonial truth.

A false accusation is a particular type of ‘fake news’. It involves matters of justice, and summons people to take sides. And since most people have no idea what is happening, no one is in a position to mount a defence of the accused. This leaves observers with the choice of maintaining a worried silence, or ducking for cover within the mob thinking, ‘there but for the grace of God . . . ’. At least, in the latter case, you get some ‘likes’ for your trouble.

The social industry did not invent the lynch mob, or the show trial. The vigilantes were out looking for alleged paedophiles, rapists and murderers to torment long before the advent of Twitter. People took pleasure in believing untruths before they were able to get them sent directly to their smartphones. Office politics and homes are filled with a version of the whispering campaigns and bullying that we see online. To disarm the online lynch mobs, trolls and bullies would be to work out why these behaviours are so prevalent elsewhere.

What, then, has the social industry changed? It has certainly made it easier for the average person to disseminate falsehoods, for random bullies to swarm on targets and for anonymized misinformation to spread lightning-quick. Above all, however, the Twittering Machine has collectivized the problem in a new way.

VIII.

In 2006, a thirteen-year-old boy named Mitchell Henderson killed himself.31 In the days that followed, his family, friends and relatives congregated on his MySpace page, leaving virtual tributes to the dearly departed.

The Twittering Machine

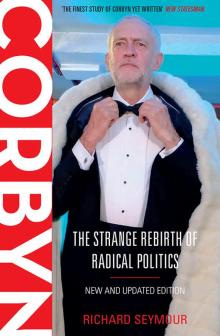

The Twittering Machine Corbyn

Corbyn